Identity Review | Global Tech Think Tank


The momentum of AI ban advocacy is rooted in the ethical, social, economic, and political challenges posed by the new technology. While many herald AI as the pathway to a new technological age, others call for restraint, regulation, or even a full ban on certain applications. From interviews with top experts like Dr. David Barnes, the Chief AI Ethics Officer of the U.S. Army, to rankings of prominent AI ethicists, Identity Review continues to engage deeply in the discussion surrounding the ethical implications of AI.

Key Areas of Concern:

This dichotomy between innovation and destruction raises significant questions about how AI should be governed, or whether certain applications should be banned outright.

Elon Musk, social media juggernaut and tech billionaire CEO of Tesla and SpaceX, has been vocal about his concerns over unregulated AI, going as far as to state, “AI is a fundamental risk to the existence of human civilization.” Musk advocates proactive regulation rather than a reactive response after problems emerge. Along with many other prominent figures in AI, Musk recently signed an open letter calling for a pause of at least six months on the training of AI systems more powerful than GPT-4. This call to action reveals strong discontent with how rapidly artificial intelligence is outpacing regulation.

The Campaign to Stop Killer Robots, an international coalition, seeks to prohibit autonomous weapons systems that could make kill decisions without human intervention. Founded in 2012, the coalition argues that lethal autonomous weapons would cross a moral line, and it has found success campaigning at the UN level. The campaign has grown considerably since its inception, from releasing its own documentary on the dangers of automation in combat, titled “Immoral Code,” to being nominated for the Nobel Peace Prize in 2020 by Norwegian MP Audun Lysbakken.

Geoffrey Hinton, often referred to as the “Godfather of Deep Learning,” has expressed concerns over the application of AI in certain areas. Though a proponent of AI and one of its pioneering researchers, Hinton has emphasized the need for caution, particularly regarding military uses of AI. He believes in the tremendous potential of the technology but has also advocated for ethical considerations and responsible deployment. Although he did not sign the six-month pause letter that Elon Musk endorsed earlier this year, Hinton’s nuanced views add significant weight to the broader conversation around the regulation of AI.

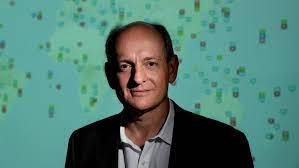

Jim Al-Khalili is a distinguished Iraqi-British theoretical physicist, academic, author, and broadcaster. He is a professor of Theoretical Physics at the University of Surrey and has contributed significantly to the field of quantum mechanics.

Al-Khalili’s interest in AI extends beyond the realm of physics into the social and ethical dimensions of technology. He has been an articulate and vocal critic of unregulated advancements in AI, particularly in the context of warfare. The physicist has consistently called for a ban on lethal autonomous weapons systems (LAWS), citing the profound moral and humanitarian implications of allowing machines to make life-and-death decisions on the battlefield without human intervention. In various interviews and public statements, he has stressed that these autonomous weapons could fundamentally change the nature of warfare and pose significant risks to civilian populations.

The broadcaster is well known for his ability to communicate complex scientific ideas to the public. As the host of various science documentaries and the long-running BBC Radio 4 program “The Life Scientific,” Al-Khalili has used his platform to engage in dialogues about the societal and ethical implications of AI. In addition to his prominence on the radio, Al-Khalili is also an influential voice in science policy. As a Fellow of the Royal Society and a member of various advisory bodies, Al-Khalili is positioned to impact the regulations and standards governing AI and other emerging technologies.

Stuart Russell, a computer science professor at UC Berkeley, co-authored the leading AI textbook, Artificial Intelligence: A Modern Approach, and in his book Human Compatible has expressed concerns over super-intelligent AI. He supports the ban on autonomous weapons and calls for a more responsible approach to AI development.

In his book, he explains, “Even if the technology is successful, the transition to widespread autonomy will be an awkward one: human driving skills may atrophy or disappear, and the reckless and antisocial act of driving a car oneself may be banned altogether.”

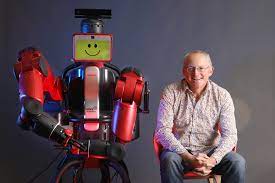

A prominent AI researcher and Professor of Artificial Intelligence at the University of New South Wales, Toby Walsh has been at the forefront of calls for a ban on lethal autonomous weapons. In 2015, he led an open letter, signed by thousands of AI and robotics researchers, calling for a preemptive ban on autonomous weapons. He argues that these weapons would constitute a new class of warfare, lowering the barrier to conflict and risking use in unintended ways.

In his book, “2062: The World that AI Made,” Walsh discusses the broader implications of AI and underscores the urgent need for regulations, including outright bans on certain applications of AI, to ensure that the technology aligns with human values.

Walsh’s strong stance on banning specific AI applications and his leadership in rallying the global AI community reflect a targeted and decisive perspective within the debate over AI governance.

Max Tegmark, a physicist and co-founder of the Future of Life Institute, has actively campaigned for responsible AI development. He’s been a central figure in mobilizing the scientific community to consider the long-term impact of AI. In his book, “Life 3.0: Being Human in the Age of Artificial Intelligence,” Tegmark explores various future scenarios related to AI and emphasizes the importance of aligning AI development with human values. Additionally, he has called for cooperation among researchers to avoid a competitive race without safety considerations.

Tegmark’s work has been influential in bringing together AI researchers, policymakers, and the public to engage in a thoughtful dialogue on the future of AI. His position is one of optimism about AI’s potential but with a strong emphasis on ethical considerations, risk management, and global cooperation. The Future of Life Institute, the nonprofit he co-founded, issued the widely publicized open letter calling for a six-month pause on training AI systems more powerful than GPT-4, with signatories such as Elon Musk.

Nick Bostrom, a Swedish philosopher, professor at the University of Oxford, and the director of the Future of Humanity Institute, has been a leading voice on existential risks and the ethical implications of advanced artificial intelligence.

Though not calling for an outright ban on AI, Bostrom has extensively written about the potential risks associated with super-intelligent AI systems. In his well-known book “Superintelligence: Paths, Dangers, Strategies,” he explores scenarios where AI might surpass human intelligence, detailing potential hazards and proposing strategies to ensure that AI development remains aligned with human values. Bostrom advocates for rigorous research into AI safety and ethics, careful governance, and international cooperation to mitigate potential risks.

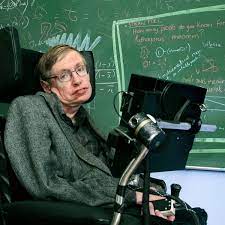

Dr. Stephen Hawking, a distinguished theoretical physicist, cosmologist, and author, was renowned for his groundbreaking contributions to our understanding of the universe, particularly his pioneering work on black holes, quantum gravity, and the nature of time. The late physicist was also one of the first public figures to sound the alarm about the dangers of artificial intelligence. He expressed grave concerns about AI, stating, “The development of full artificial intelligence could spell the end of the human race.” Hawking’s fears were rooted in the possibility that AI could surpass human intelligence and reach a point of no return.

The advocacy for an AI ban encompasses a diverse array of voices, concerns, and motivations. From fears of super-intelligent AI voiced by thought leaders like Elon Musk to calls by international coalitions for a ban on killer robots, the debate is complex and multifaceted.

What unites these diverse perspectives is a shared understanding of the profound impact AI may have on our society and a desire to approach its development with caution, ethics, and a keen eye toward potential risks.

The responses of governments, coupled with ongoing public discourse, point to a future where AI is likely to be governed by a blend of regulation, self-imposed industry standards, and international agreements. As AI systems advance at an accelerating pace, the notion of an AI ban may not be so radical after all.

ABOUT IDENTITY REVIEW

Identity Review is a digital think tank dedicated to working with governments, financial institutions and technology leaders on advancing digital transformation, with a focus on privacy, identity, and security. Want to learn more about Identity Review’s work in digital transformation? Please message us at team@identityreview.com. Find us on Twitter.
